tinyScenegraph driving clusters

Friends of tinySG,

like most scene graphs, tinySG has no inherent support for distributed

rendering in a cluster environment. However, there are two distinct

network layers operating on top of tinySG: eqcsg, based on

the Equalizer (TM) server and

tsgmpi, a peer

to peer implementation on the foundation of the message passing interface

(MPI). Both layers have strengths and weaknesses and come in separate

libraries with dependencies to the tinySG core renderer and Equalizer

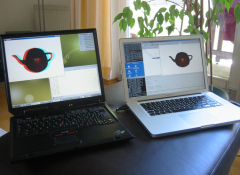

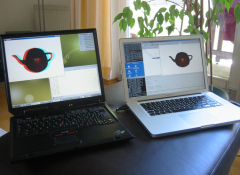

or MPI, respectively. The image to the right shows a "mobile cluster" of

two nodes, running tsgEdit on Windows XP as the control host and a Linux

render slave displaying the same view in anaglyph stereo.

eqcsg benefits strongly from Equalizer's rich feature set, most importantly:

- Driving multi-screen display walls from clustered nodes or multi-pipe machines (a dual-GPU machine with ATI's Eyefinity could drive up to 12 displays/projectors).
- Using multi-GPU systems to speed up rendering on a single display device, similar to what CrossFire or SLI does, but maximising performance by using render domain knowledge.
- Scalable OpenGL rendering using stereo, screen-space and DPLEX decomposition. The decomposition schemes may be combined in a hierarchy, e.g. to concentrate the power of eight GPUs on four projectors driving a two-segment passive-stereo wall.
- Driving tracked installations, like CAVEs or L-shaped walls.
- Support for Ethernet and InfiniBand hardware.
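The eight-GPU example from the list can be made concrete with a small planning sketch. It is not Equalizer's or eqcsg's API: the names `Task`, `split_viewport`, `plan` and `dplex_owner` are invented for illustration, and the sketch assumes that GPUs divide evenly among projectors and that screen-space decomposition uses vertical strips.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Task:
    gpu: int         # index of the GPU doing the work
    segment: int     # wall segment the result is shown on
    eye: str         # "left" or "right" (one projector per eye in passive stereo)
    viewport: tuple  # fractional (x, y, width, height) within the segment


def split_viewport(parts):
    """Screen-space decomposition: cut a unit viewport into vertical strips."""
    width = 1.0 / parts
    return [(i * width, 0.0, width, 1.0) for i in range(parts)]


def plan(gpus, segments, eyes=("left", "right")):
    """Stereo + screen-space hierarchy: spread GPUs over (segment, eye) projectors."""
    projectors = list(product(range(segments), eyes))
    if gpus % len(projectors) != 0:
        raise ValueError("sketch assumes GPUs divide evenly among projectors")
    per_projector = gpus // len(projectors)
    tasks = []
    gpu = 0
    for segment, eye in projectors:
        for viewport in split_viewport(per_projector):
            tasks.append(Task(gpu, segment, eye, viewport))
            gpu += 1
    return tasks


def dplex_owner(frame, gpus):
    """Time-multiplex (DPLEX) alternative: whole frames go round-robin to GPUs."""
    return frame % gpus


# Eight GPUs, two wall segments, two eyes: four projectors, two GPUs each.
tasks = plan(gpus=8, segments=2)
for task in tasks:
    print(task)
```

Each projector ends up with two GPUs, each rendering half of that projector's image for one eye. A real configuration would also describe how the partial images are transferred and assembled, which this sketch leaves out.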

Each cluster is configured using a single ASCII configuration file. tinySG makes the setup relatively easy as it comes with a 3D cluster configurator: connecting hosts to one another, connecting GPUs to projectors, or splitting the workload amongst available resources is as easy as plugging cables into their physical counterparts. Each physical entity is modelled in a 3D representation the user can navigate through. Connections are established through a drag-and-drop interface in the scene, and animations show the data flow through the cluster network. This makes it easy to see which GPU drives which projector, which machine has unused resources that could support others, and so on. See the image on the left to get an impression of the data flow in a 4-host, 6-GPU, 3-segment cluster configuration.
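To illustrate the kind of question the configurator answers visually, here is a minimal sketch of a cluster model that records which GPU drives which projector and reports unused GPUs. The `Cluster` class, its methods and all host, GPU and segment names are hypothetical. Only the counts (4 hosts, 6 GPUs, 3 segments) come from the text, and the wiring is invented.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    gpus: dict = field(default_factory=dict)    # GPU name -> host name
    drives: dict = field(default_factory=dict)  # GPU name -> projector name

    def add_gpu(self, host, gpu):
        self.gpus[gpu] = host

    def connect(self, gpu, projector):
        """Scripted stand-in for dragging a GPU onto a projector."""
        if gpu not in self.gpus:
            raise KeyError(f"unknown GPU: {gpu}")
        self.drives[gpu] = projector

    def idle_gpus(self):
        """GPUs not driving a projector, i.e. candidates for helping others."""
        return sorted(g for g in self.gpus if g not in self.drives)


cluster = Cluster()
# 4 hosts and 6 GPUs; the distribution of GPUs over hosts is an assumption.
for host, gpu in [("host1", "gpu1a"), ("host1", "gpu1b"), ("host2", "gpu2a"),
                  ("host2", "gpu2b"), ("host3", "gpu3a"), ("host4", "gpu4a")]:
    cluster.add_gpu(host, gpu)

# 3 wall segments, each driven by one GPU.
cluster.connect("gpu1a", "segment-left")
cluster.connect("gpu2a", "segment-centre")
cluster.connect("gpu3a", "segment-right")

print(cluster.idle_gpus())  # ['gpu1b', 'gpu2b', 'gpu4a']
```

The three idle GPUs are the "unused resources" that a decomposition scheme could assign to support the GPUs driving the segments.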

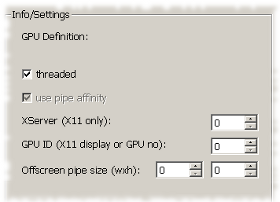

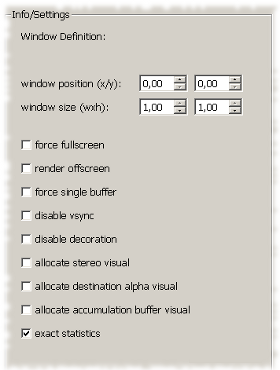

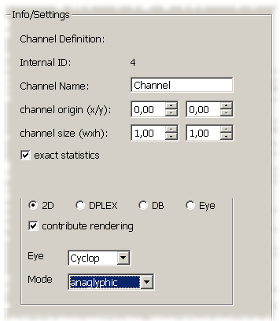

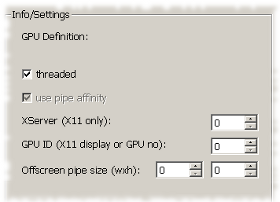

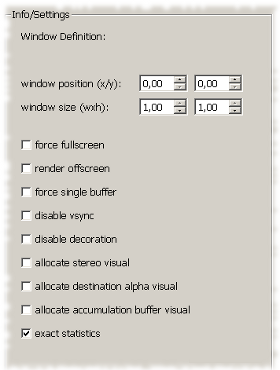

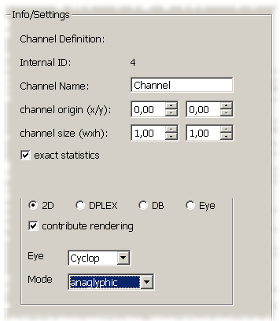

Unfortunately, the tremendous flexibility of Equalizer introduces some complexity to the configuration of a cluster. For example, controlling a multi-projector display wall requires information on the wall dimensions, its segmentation into projection areas, and the hosts/GPUs driving the projectors or panels. eqcsg's configurator addresses this issue by providing context-sensitive configuration tabs on the right side of the dialog, as shown below. Picking in the 3D view of the projection system selects the corresponding host or wall in its list and shows its properties in the info/settings area.

From left to right: Configuration tabs for walls, GPUs, windows and channels (render areas inside a window).

In order to ease the creation of alternative configurations, both the wall

definitions and the cluster machines may be saved to separate files for

later reuse.
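The wall information mentioned above (dimensions and segmentation into projection areas) can be sketched as a small calculation. This is an illustration only: the function name `segment_corners`, the three-corner description of each segment, and the coordinate convention (wall centred on the origin, facing the viewer along -Z, units in metres) are assumptions, not the format eqcsg stores. Overlap for edge blending is ignored.

```python
def segment_corners(width, height, columns, rows=1, distance=1.0):
    """Return bottom-left, bottom-right and top-left corners per wall segment.

    The wall is centred on the origin and placed `distance` metres in front
    of the viewer along -Z. Segments are listed row by row, left to right.
    """
    if columns < 1 or rows < 1:
        raise ValueError("need at least one column and one row")
    seg_w = width / columns
    seg_h = height / rows
    segments = []
    for row in range(rows):
        for col in range(columns):
            left = -width / 2 + col * seg_w
            bottom = -height / 2 + row * seg_h
            segments.append({
                "bottom_left": (left, bottom, -distance),
                "bottom_right": (left + seg_w, bottom, -distance),
                "top_left": (left, bottom + seg_h, -distance),
            })
    return segments


# A 6 m x 2 m wall split into three side-by-side projection areas.
wall = segment_corners(width=6.0, height=2.0, columns=3)
for index, segment in enumerate(wall):
    print(index, segment)
```

Keeping such a wall description separate from the list of machines is what allows the same wall to be reused with a different set of hosts and GPUs.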

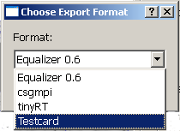

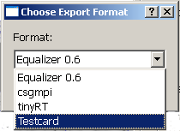

Once a setup is completed, it may be saved in a neutral format or exported for use with the different cluster layers of tinySG. A special option creates a testcard pattern optimised for the given setup: it generates a tinySG scene with regular grids and circles in real-world sizes that can be loaded into a cluster session, allowing projection distortions, blending areas or aspect ratio to be measured with a ruler on the physical wall.
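As a rough illustration of the testcard idea, the following sketch computes where grid lines would fall on a wall of known physical size and how a ruler measurement of a projected circle translates into an aspect-ratio error. Both function names and the choice to centre the grid on the wall are assumptions for the example. The actual pattern generated by tinySG may be laid out differently.

```python
def grid_lines(extent, spacing):
    """Grid line positions in metres along a wall edge of length `extent`.

    Lines are placed symmetrically so that one passes through the centre.
    """
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    half_count = int((extent / 2) // spacing)
    return [i * spacing for i in range(-half_count, half_count + 1)]


def aspect_error(measured_width, measured_height):
    """Relative deviation of a projected testcard circle from being round."""
    return measured_width / measured_height - 1.0


# Vertical grid lines every 0.5 m on a 4 m wide wall.
vertical = grid_lines(extent=4.0, spacing=0.5)
print(vertical)

# A circle measured as 0.52 m wide and 0.50 m high is stretched horizontally.
print(f"{aspect_error(0.52, 0.50):+.1%}")
```

Because the grid is defined in real-world units, any difference between the computed line positions and what the ruler shows on the wall points directly at a projection or configuration error.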

Keep rendering,

Christian
